It’s true: some think that a robot apocalypse will one day lead to mass unemployment and the virtual extinction of all writers. While there have been certain advances in AI-powered writing, the results are mixed and limited mostly to writing that simply would not be done otherwise. Writers, here’s why you don’t have to fear for your jobs. Yet.

Mark Twain once quipped that reports of his death were, well, greatly exaggerated. It’s one of the most recognizable quotes associated with Mr. Twain, and one that popped into my skull when I started pondering the future of writing in the era of robots. Thanks to everyone from Philip K. Dick to Amazon Alexa, it’s easy to imagine a world where automatons punch out prose in a flurry of algorithmic excellence, rendering the writer obsolete and suddenly available for new work. Writers have been around for millennia, so it would make sense that this good run would one day come to an end.

But hold on.

I’m here to tell you that’s not going to happen. Not in my lifetime, at least. I’ve spent time digging into the topic and analyzing automated writing bots… and I want you to know your job is safe. For now. While automated writing is a thing when it comes to data-driven stories, most of these passages can still be told apart, at least somewhat, from sentences written by a human being with a pulse, whose specialties have long been emotion, empathy, subtext, context, nuance, research and the ability to report.

All still alive and well.

But here’s the thing: Robots are catching on. To that end, I’ve plucked three examples where AI is doing a job formerly dominated by humans. In these areas, automation has created opportunities for content companies to deliver copy where they might not hire a writer at all, proving that not all computer-generated writing is bad if it wouldn’t otherwise exist, even if it is less inspired than, say, words with a living, breathing soul behind them (i.e., a talented scribe who agreed to take the job). I’ll leave it to you to decide whether these examples reveal blind spots: things that can’t be replicated by replicants, and reasons algorithmic regurgitations aren’t always a replacement for the human touch, where subtlety, humor, insight and wordplay add value for ravenous readers.

So let’s get into it… Here are three examples of the good, the bad and the ugly when it comes to robot-powered journalism.

Example #1: The Good

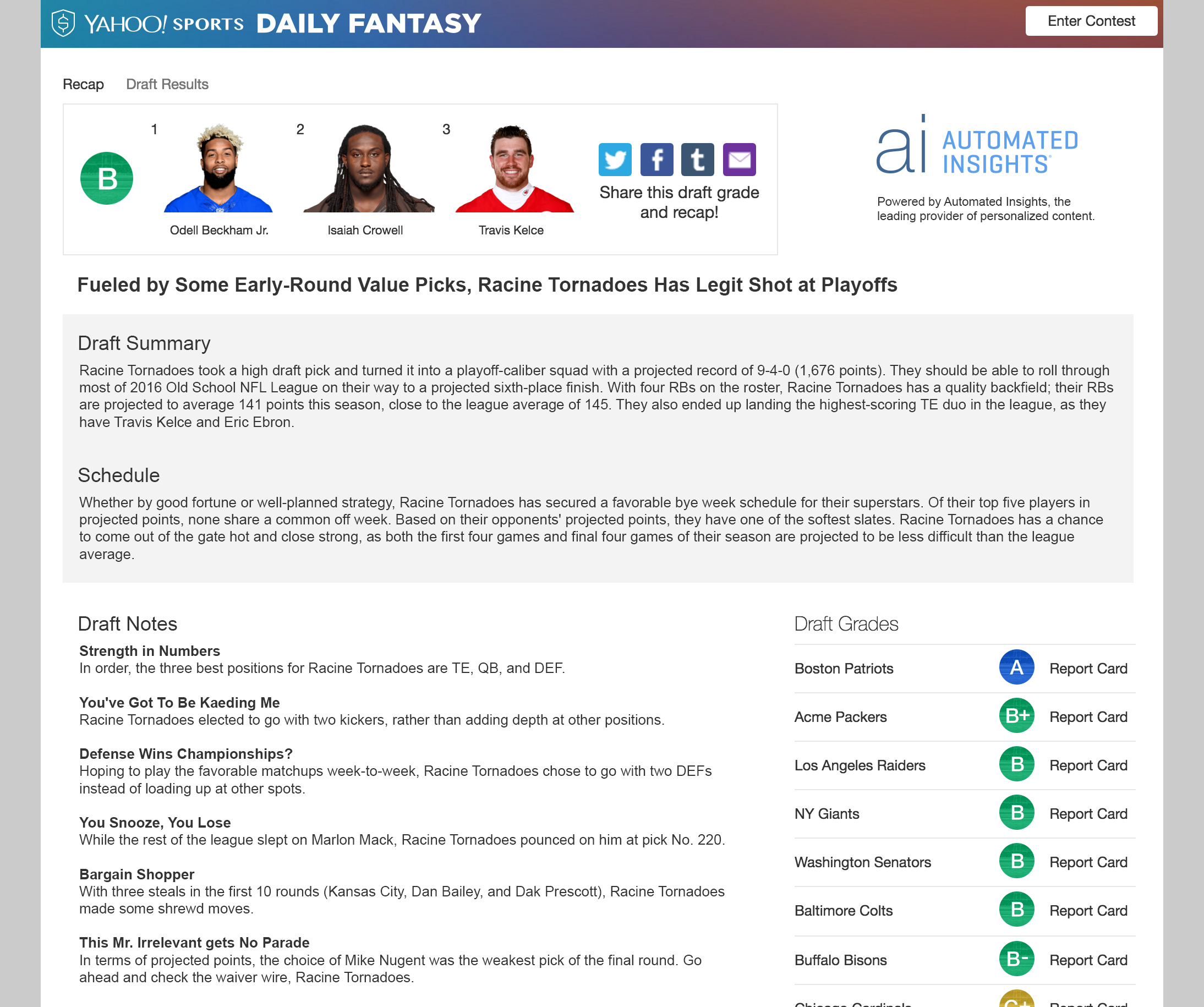

If you’ve ever owned a fantasy football team with Yahoo, then you’ve probably come across draft reports, matchup previews and recaps packed with tidbits you can use as fodder for smack-talking your opponents (aka your buddies). These insights are custom-crafted around your team (based on a set of data inputs) and even take on a snarky tone in places, making it seem as if you have your very own beat writer covering your squad. In fact, you do not.

Here’s an example of an automated draft recap courtesy of Wordsmith, the self-proclaimed “world’s most powerful natural language platform” from Automated Insights:

Because of the ravenous appetite of fantasy football owners and the sheer number of people who own teams, an automated write-up like the one above makes sense, since it lends an air of fun customization to the fantasy football ownership experience. These write-ups, which provide instant gratification to devout data lovers, simply could not happen otherwise, given the millions of teams that form with the arrival of every new season.

In fact, over 70 million fantasy football player recaps have been written to date for Yahoo! alone, amounting to “over 100 years of incremental audience engagement by working with Automated Insights.” That’s not just smart, but AI-intelligent and a cool development that won’t put you, nameless writer friend, out of a job.

Cool Factor Grade: A

Example #2: The Mediocre

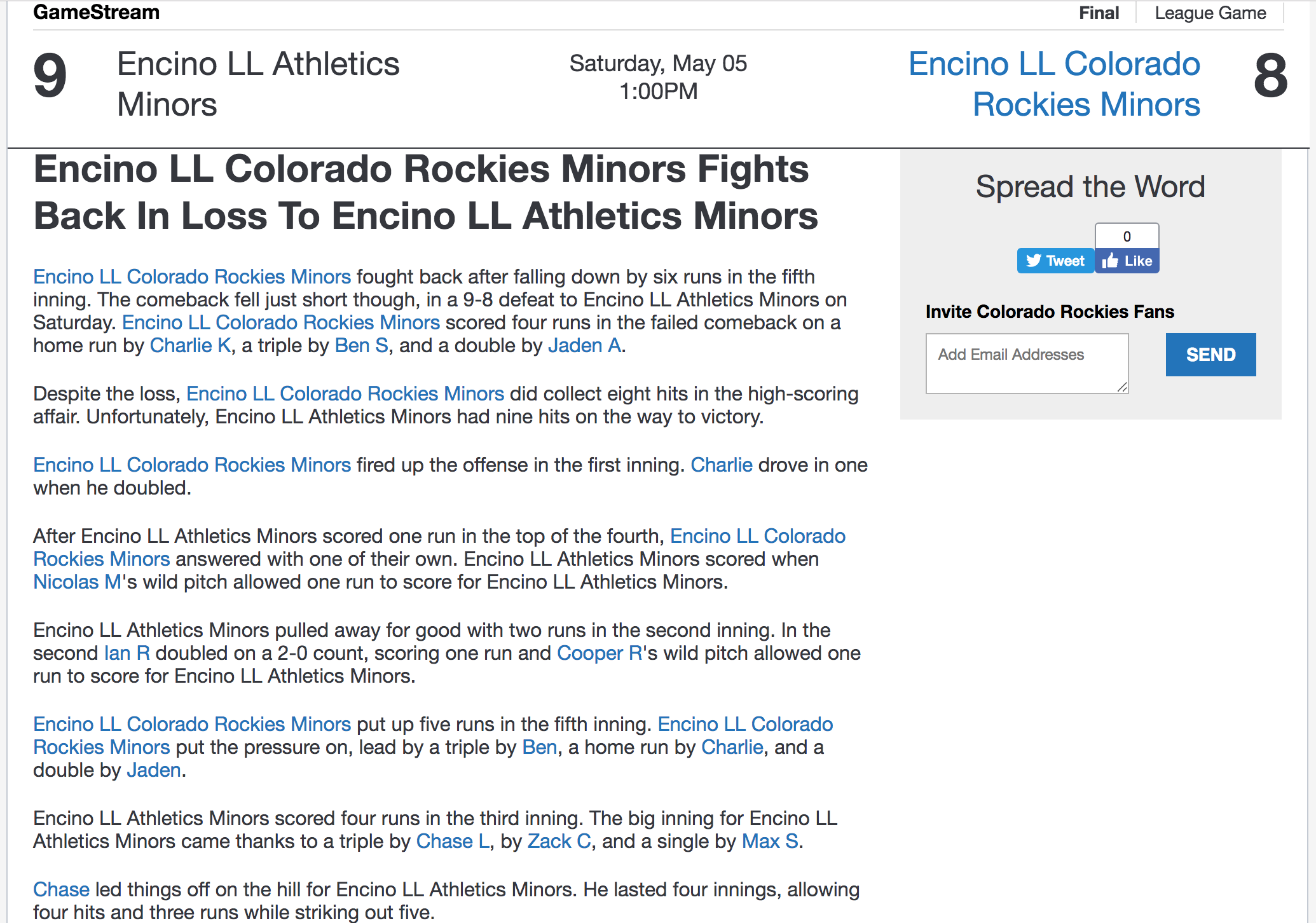

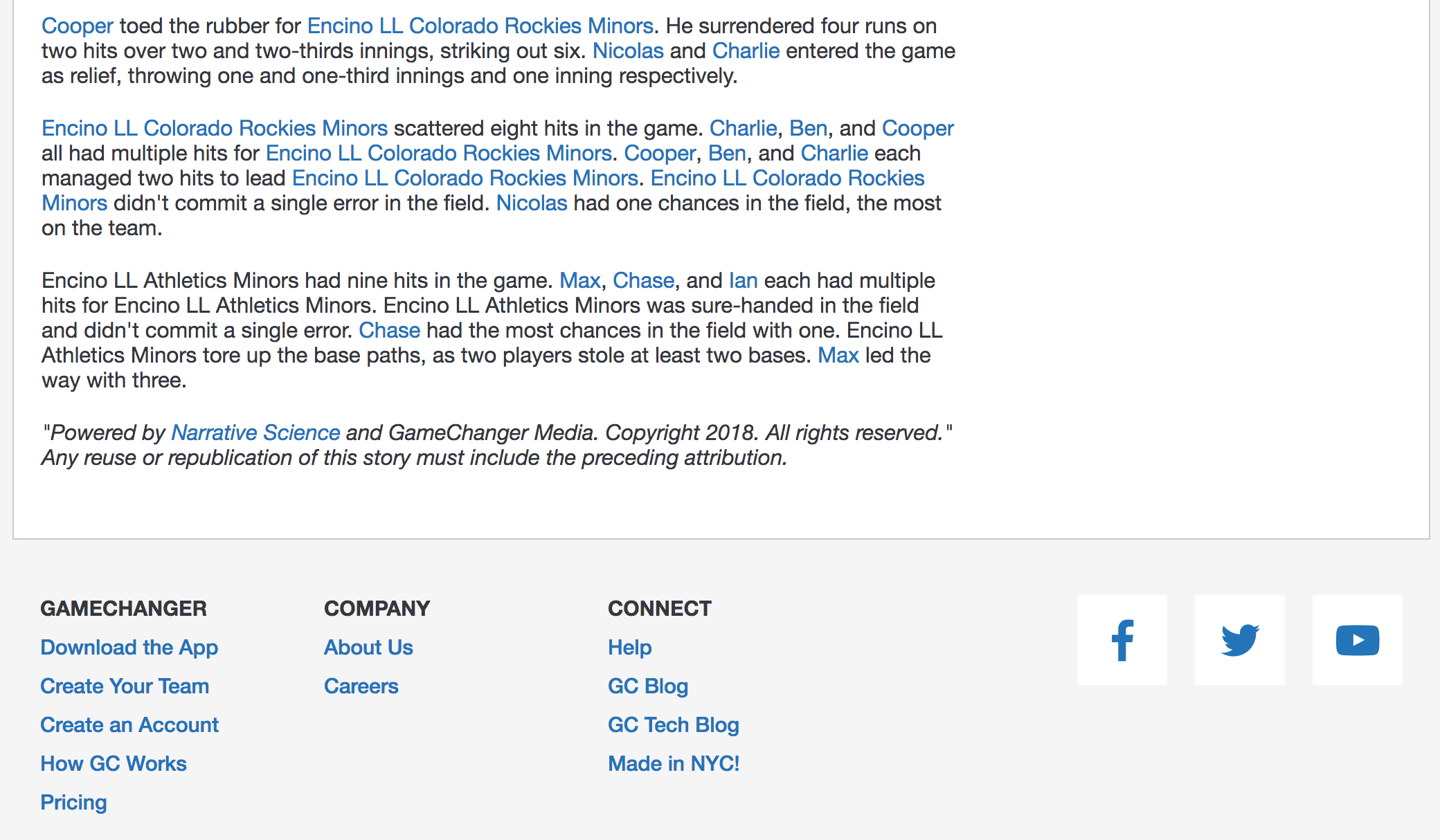

While we’re on the topic of sports, the next instance of automated writing comes to us from your friends at Narrative Science. More specifically, it comes from an app they power that’s known to parents of kids who play Little League Baseball in America: GameChanger. Essentially, it’s an app that lets you track stats and keep score for baseball games so people can follow along in real time.

Here’s an example of an auto-generated GameChanger recap from one of my son’s recent Little League games:

The above example represents the good parts of automated “journalism”… and the bad. The good? The unique factor. Since no news outlet in its right mind is going to dedicate a resource to covering a Little League game for 10-year-olds, this recap simply would not exist if it weren’t for this app, whose “technology interprets your data, then transforms it into insightful, natural language narratives at unprecedented speed and scale.” Best of all, it’s cool that a game played by kids who just learned to read (and barely have time to do it in between Fortnite sessions) is getting a treatment akin to a story on a local sports site.

That said, I use the word “akin” loosely. What’s missing are the little details and nuances of the game that got lost in translation and were consequently left out of the recap. The color, if you will. So much so that anyone who was at this particular game would laugh at the omissions. Take the phrase “failed comeback.” In the last inning, our team, the Rockies, almost pulled off the comeback of the season after being down 9-3 (in a game we technically lost 9-8). What the fact-based rehashing leaves out entirely are the heart-racing elements of the game, the managerial moves, the buzz in the stands and, most notably, the turn of events where the umps called the game with two outs in the last inning. That’s right: this game never officially ended because of a time limit, a nuance that isn’t reflected in the final write-up.

Hearts were broken (mainly the parents’, the kids’ and, okay, mine). The opposing manager even polled the kids on his A’s to see if they wanted to finish the game regardless of the time limit. They respectfully declined. These are all key pieces of this particular story, the details that breathe life into a game recap beyond the stats. No machine can capture “heartbreaking,” and while that may partly reflect a limitation of the app, this recap is built exclusively on data inputs (hits, pitching changes and score tallies). It works much the same way the Washington Post’s AI-powered Heliograf auto-wrote hundreds of stories about the 2016 Rio Olympic Games and the 2016 U.S. presidential election, using data alone rather than interpretation, prediction and analysis.

Cool Factor Grade: B-

Example #3: The Bad and the Ugly

While the aforementioned computer-generated content is passable when it comes to translating data sets into sentences (albeit not award-winning ones), there are also examples of automation producing writing that isn’t just noticeably bad but also, surprise… factually inaccurate. In June 2017, a system called L.A. Quakebot (designed to instantly report earthquakes by extracting “relevant data from the USGS report” and plugging it into “a pre-written template”) misreported a 6.8-magnitude earthquake in Isla Vista, Calif., which shook people not because the earth moved, but because there was no actual earthquake. At all.

As it turns out, someone was updating an old story about an earthquake that struck Santa Barbara back in 1925, and because of a computer bug, an automated story about a 6.8-magnitude earthquake immediately appeared in the L.A. Times, triggering stories and tweets about the fictitious event. Here’s the L.A. Times retraction of the tweet:

Please note: We just deleted an automated tweet saying there was a 6.8 earthquake in Isla Vista. That earthquake happened in 1925.

— L.A. Times: L.A. Now (@LANow) June 22, 2017
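For the curious, here is a minimal Python sketch of how a template-driven bot works in principle. It is not the L.A. Times code: the `QuakeReport` record, its fields and the template wording are all hypothetical, standing in for the “relevant data from the USGS report” and the “pre-written template” described earlier. Notice that nothing in it asks when the quake actually happened.

```python
from dataclasses import dataclass


@dataclass
class QuakeReport:
    """Hypothetical stand-in for data pulled from a USGS report (fields are assumptions)."""
    magnitude: float
    place: str


# Hypothetical pre-written template with blanks for the extracted data.
TEMPLATE = (
    "A magnitude {magnitude:.1f} earthquake was reported near {place}, "
    "according to the U.S. Geological Survey."
)


def write_story(report: QuakeReport) -> str:
    """Drop the report's fields into the template; the event's date is never checked."""
    return TEMPLATE.format(magnitude=report.magnitude, place=report.place)


if __name__ == "__main__":
    print(write_story(QuakeReport(magnitude=6.8, place="Isla Vista, Calif.")))
```

Because the template only sees a magnitude and a place, a record describing a 1925 quake yields the same breaking-news sentence as a brand-new one.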

A bad omen, perhaps. Bad reporting at the hands of something supposedly artificially intelligent? Definitely. Reporting a nonexistent earthquake that could trigger mass confusion and tsunami fears is the kind of robo-inspired “writing” that qualifies as, well, bad. Human errors happen, too; just ask the person who, earlier this year, caused an incoming ballistic missile warning to be sent in Hawaii, scaring the living sh*t out of Hawaiians and tourists for 38 minutes as the panic and horror of imminent death set in.

But we expect more out of our robots. Even if they are unpaid automatons, we just don’t expect screw-ups. So until they start to think like human beings, and write like them, I’d say our jobs are safe.

For now.

Cool Factor Grade: F

Please Note: This post was not written by a robot.

As a fun aside, try this quiz from the New York Times to see if you can tell the difference between human and computer-generated writing. I got 8 out of 10… and I just wrote a piece on this specific topic. Good luck!