Technical SEO is a lot like a city’s power grid. Even though you can’t live without it, many don’t understand how it works — and some aren’t quite sure what it even is. And that makes total sense because, like the power grid, it works out of sight, behind the scenes, to make your site work and improve visitors’ experiences.

In this article, we explore the world of technical SEO, its core components, and its profound impact on your site’s performance. Let’s start by getting comfortable with the basics of technical SEO. Then, its core components and inner workings will fall nicely into place.

Defining Technical SEO and Its Unique Role in Search

Technical SEO, simply put, focuses on the backend of your website, ensuring that search engines can effectively crawl, index, and rank your web pages. In that sense, it’s the foundation on which you build a successful SEO strategy. While it may not be as glamorous as creating engaging content or acquiring backlinks, it’s the backbone of search engine visibility.

The interplay between technical SEO, on-page SEO, and off-page SEO

Technical SEO doesn’t operate in isolation. It collaborates with on-page and off-page SEO efforts to deliver a well-rounded optimization strategy.

On-page SEO deals with content and keyword optimization, while off-page SEO involves link-building and social signals. Technical SEO ensures that your website is a well-oiled machine supporting these efforts.

For example, once you’ve fine-tuned your site’s crawlability — an element of technical SEO — search engines can more easily find the keywords you’ve used to improve your on-page SEO. In this way, technical SEO enables your entire content marketing strategy.

Core Components of Technical SEO

Let’s delve into the core components of technical SEO that make it an integral part of your website’s search engine performance.

Crawlability: Ensuring search engines can access your content

By optimizing crawlability, you control which parts of your site search engines read and which parts they skip. A crawlability strategy typically covers three things: using robots.txt, managing your crawl budget, and identifying and fixing crawl errors.

Consider some examples of how these features of technical SEO work:

Robots.txt

Robots.txt is like a virtual “keep out” sign that instructs search engine bots on which parts of your site they should or shouldn’t crawl.

Suppose you have a section of your website that you don’t want search engine bots to crawl. You can use a robots.txt file to achieve this. Here’s an example of how to set up a robots.txt file to disallow crawling of a specific directory:

    User-agent: *
    Disallow: /sensitive-directory/

In this example, the “User-agent: *” directive applies to all search engine bots, and the “Disallow: /sensitive-directory/” directive instructs them not to crawl any pages within the “sensitive-directory” on your website. Keep in mind that robots.txt controls crawling, not indexing: a disallowed URL can still show up in search results if other pages link to it, and the file itself is publicly readable. For content that is truly sensitive, rely on authentication, and use a noindex tag for pages that must stay out of search results.
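One way to sanity-check rules like these before publishing them is to run them through the robots.txt parser in Python’s standard library. The sketch below is illustrative only: the example.com URLs and the `is_crawl_allowed` helper are assumptions, not part of any particular site or tool.

```python
from urllib.robotparser import RobotFileParser

# The same two rules as in the example above, one directive per line.
ROBOTS_TXT = """\
User-agent: *
Disallow: /sensitive-directory/
"""


def is_crawl_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given rules let `user_agent` crawl `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Hypothetical URLs used only for illustration.
    for url in (
        "https://www.example.com/sensitive-directory/report.html",
        "https://www.example.com/blog/technical-seo/",
    ):
        print(url, is_crawl_allowed(ROBOTS_TXT, "Googlebot", url))
```

With these rules, the first URL is reported as blocked and the second as allowed. A check like this only tells you what a well-behaved bot would do; it says nothing about whether a URL is indexed.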

Managing crawl budget

Crawl budget refers to the number of pages a search engine bot can crawl on your website within a given time frame. If your website has a large number of pages, you may want to prioritize which pages are crawled more frequently.

For instance, suppose you want search engines to prioritize crawling and indexing your latest blog posts.

To do this, you can set up an XML sitemap that lists your recent blog posts along with the date each one was last modified. This helps search engines discover new and updated pages quickly. A sitemap is a hint rather than a command, so it doesn’t guarantee those pages a share of the crawl budget, but it does make your most important content easier to find.
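As a rough illustration, a sitemap for recent posts could be generated with a few lines of Python. This is a minimal sketch that assumes you already have each post’s URL and last-modified date; the `build_sitemap` function and the example.com URLs are hypothetical.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(posts: list[tuple[str, date]]) -> str:
    """Build a sitemap string from (url, last_modified) pairs, newest first."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, last_modified in sorted(posts, key=lambda p: p[1], reverse=True):
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # <lastmod> uses the W3C date format (YYYY-MM-DD).
        ET.SubElement(url, "lastmod").text = last_modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical posts used only for illustration.
    recent_posts = [
        ("https://www.example.com/blog/crawl-budget/", date(2024, 1, 5)),
        ("https://www.example.com/blog/core-web-vitals/", date(2024, 3, 1)),
    ]
    print(build_sitemap(recent_posts))
```

Sorting newest first is only a readability choice here; search engines read the `lastmod` values rather than the order of entries.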

Fixing crawl errors

By fixing crawl errors, you ensure search engines can crawl your content and use it to rank your site in their results.

Suppose you discover that Google Search Console has reported crawl errors, specifically “404 Not Found” errors, for some of your web pages. To fix this issue, you should:

- Investigate the cause of the error: Check whether the URLs were mistyped or if there’s a problem with the linking structure of your website

- Redirect or update the links: If the error is due to URL changes, set up 301 redirects from the old URLs to the new ones. If the links themselves are wrong, correct them at the source

- Resubmit your sitemap: After fixing the errors, resubmit your updated sitemap to Google Search Console. This helps search engines re-crawl and re-index the corrected pages
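The first two steps can be partly automated. The sketch below assumes you have exported the 404 paths from your crawl report and have a list of paths that currently exist on the site. It uses fuzzy string matching to suggest a likely 301 target for each broken path, which catches mistyped URLs in particular. The function names, sample paths, and similarity cutoff are assumptions, and every suggestion should be reviewed by a person before you create a redirect.

```python
from difflib import get_close_matches
from typing import Optional


def suggest_redirect(
    broken_path: str, live_paths: list[str], cutoff: float = 0.8
) -> Optional[str]:
    """Return the live path most similar to `broken_path`, or None if none is close."""
    matches = get_close_matches(broken_path, live_paths, n=1, cutoff=cutoff)
    return matches[0] if matches else None


def build_redirect_map(
    broken_paths: list[str], live_paths: list[str]
) -> dict[str, Optional[str]]:
    """Map each 404 path to a suggested 301 target (None means: investigate by hand)."""
    return {path: suggest_redirect(path, live_paths) for path in broken_paths}


if __name__ == "__main__":
    # Hypothetical paths used only for illustration.
    live = ["/blog/technical-seo/", "/about/", "/contact/"]
    broken = ["/blog/techincal-seo/", "/xyz/"]
    for old, new in build_redirect_map(broken, live).items():
        print(old, "->", new)
```

In this sample, the mistyped blog path is matched to the real one, while `/xyz/` has no close match and is left for manual review.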

By using robots.txt, managing crawl budget, and fixing crawl errors effectively, you can improve your website’s technical SEO and overall search engine performance.

Indexation: Guiding search engines on what to display

By leveraging indexation, you take the reins, telling search engines what to display in their results. XML sitemaps, noindex tags, and canonical tags should sit at the top of your indexation toolbox.

Let’s dive into how each of these works:

XML sitemaps:

XML sitemaps are like a roadmap for search engines. They provide a structured list of URLs on your website that you want search engines to crawl and index. Here’s how they work:

- Creation: You create an XML sitemap, typically using tools or website plugins. This file lists all the essential URLs you want search engines to find, including pages, posts, images, and more

- Submission: You then submit the XML sitemap to search engines via Google Search Console, Bing Webmaster Tools, or other search engine-specific tools. This tells search engines that these pages exist and that you consider them important

- Crawling: Search engine bots use the XML sitemap alongside the links they follow, which helps them discover and index your content more efficiently

- Updating: Whenever you add new content or make significant changes to your website, you should update your XML sitemap to ensure that search engines are aware of the latest updates
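The updating step is easy to let slip, so it can help to compare your sitemap against the pages you have actually published. The following sketch assumes you can produce a list of published URLs from your CMS. The `sitemap_drift` helper and the sample data are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> set[str]:
    """Extract every <loc> value from a sitemap document."""
    root = ET.fromstring(sitemap_xml)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def sitemap_drift(sitemap_xml: str, published_urls: list[str]) -> dict[str, list[str]]:
    """Report published URLs missing from the sitemap and listed URLs no longer published."""
    listed = sitemap_urls(sitemap_xml)
    published = set(published_urls)
    return {
        "missing_from_sitemap": sorted(published - listed),
        "stale_in_sitemap": sorted(listed - published),
    }


if __name__ == "__main__":
    # Hypothetical sitemap and page list used only for illustration.
    sample = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://www.example.com/</loc></url>"
        "<url><loc>https://www.example.com/old-page/</loc></url>"
        "</urlset>"
    )
    pages = ["https://www.example.com/", "https://www.example.com/new-post/"]
    print(sitemap_drift(sample, pages))
```

Anything listed under `missing_from_sitemap` is a candidate to add, and anything under `stale_in_sitemap` is a candidate to remove or redirect.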

Noindex tags:

Noindex tags are HTML meta tags that instruct search engines not to index specific pages of your website. You use them when you want to keep certain content out of search engine results. Here’s how to use them and how they affect your SEO:

- Placement: You place a noindex meta tag within the head section of the page’s HTML, or you send the equivalent X-Robots-Tag HTTP response header. Either one tells search engines not to index that particular page. The page must remain crawlable, because a bot that is blocked by robots.txt never sees the tag

- Use cases: You might use noindex tags for pages like thank-you pages, login pages, privacy policies, or any content that’s not meant for public search engine results

- SEO impact: Pages with noindex tags won’t appear in search engine results, helping you avoid duplicate content issues and ensure that only valuable content is indexed
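To confirm that a page really carries a noindex directive, you can inspect both places it may appear: the robots meta tag and the X-Robots-Tag response header. The sketch below works on HTML and headers you have already fetched. The `is_noindex` helper is an assumption for illustration, and it ignores bot-specific variants such as a `googlebot` meta tag or a user-agent prefix in the header.

```python
from html.parser import HTMLParser
from typing import Optional


class _RobotsMetaParser(HTMLParser):
    """Collect the directives from every <meta name="robots"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            content = attr.get("content", "")
            self.directives.update(d.strip().lower() for d in content.split(","))


def is_noindex(html: str, headers: Optional[dict[str, str]] = None) -> bool:
    """Return True if the meta tag or the X-Robots-Tag header says noindex."""
    parser = _RobotsMetaParser()
    parser.feed(html)
    directives = set(parser.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            directives.update(d.strip().lower() for d in value.split(","))
    return "noindex" in directives


if __name__ == "__main__":
    # Hypothetical thank-you page used only for illustration.
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(is_noindex(page))
```

A check like this fits naturally into a pre-launch review, where an accidental noindex on an important page is an easy mistake to miss.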

Canonical tags:

Canonical tags also live in a page’s HTML head, but they are link elements rather than meta tags, and they work differently than noindex tags. They help search engines understand the preferred version of a page when multiple versions of similar content exist. Here’s how to use canonical tags to your advantage:

- Placement: You place a canonical tag in the HTML code of a page, specifying the canonical URL you want search engines to consider as the authoritative version

- Use cases: Canonical tags are particularly useful for resolving duplicate content issues that can occur due to URL parameters, session IDs, or different versions of the same page

- SEO impact: When search engines encounter canonical tags, they identify duplicate content but then attribute the authority to the canonical URL you specified. This consolidates ranking signals on one URL instead of splitting them across duplicates
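To make the URL-parameter case concrete, the sketch below shows one way to derive a canonical URL by stripping parameters that don’t change the page content, then emitting the matching link element. The list of ignored parameters is an assumption and will differ from site to site, as will the helper names.

```python
import html
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that do not change the content of a page on this site.
IGNORED_PARAMS = {"sessionid", "utm_source", "utm_medium", "utm_campaign"}


def canonical_url(url: str) -> str:
    """Drop ignored parameters and the fragment, and sort what remains."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


def canonical_link_tag(url: str) -> str:
    """Build the link element that belongs in the page's head section."""
    href = html.escape(canonical_url(url), quote=True)
    return f'<link rel="canonical" href="{href}">'


if __name__ == "__main__":
    # Hypothetical URL used only for illustration.
    print(canonical_link_tag("https://www.example.com/shoes?sessionid=abc123&color=red"))
```

Every variant of the same page, whatever session ID or tracking parameters it carries, then points search engines at a single preferred URL.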

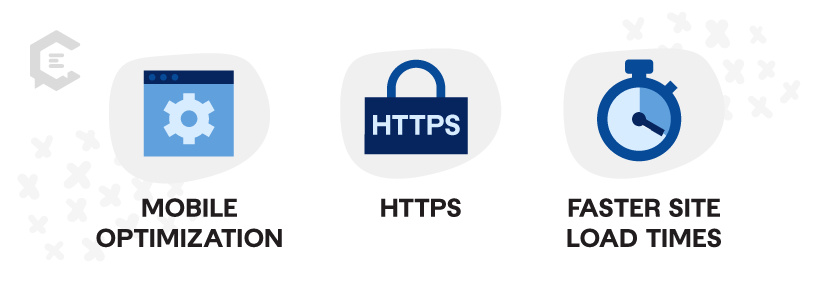

Website Architecture and Speed

You should optimize your site’s layout and speed so it’s easier for users to enjoy. Three factors matter most:

- Mobile optimization: Mobile optimization is critical due to the increased use of smartphones for web browsing

- HTTPS: HTTPS provides a secure connection, and Google treats it as a ranking signal because it enhances user safety

- Faster site load times: When your site loads faster, you improve your SEO and get visitors to the content they came for sooner

Impact of Technical SEO on User Experience

The user experience isn’t just about design and content. Technical SEO plays a vital role, especially when it comes to how users benefit from your site structure and the speed and mobile performance of your pages:

- Site structure and navigation: An organized and logical site structure makes it easier for users to find what they’re looking for. Intuitive navigation can reduce bounce rates and increase user engagement

- Enhancing page speed and mobile responsiveness: Slow websites can deter users, and Google’s mobile-first indexing means mobile optimization is no longer optional
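One common way to put a number on site structure is click depth: how many clicks it takes to reach a page from the homepage. That measure is not defined above, so treat it as an assumption introduced for illustration. The sketch computes it with a breadth-first search over a map of internal links; the link map itself is hypothetical.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


if __name__ == "__main__":
    # Hypothetical internal link map: page -> pages it links to.
    site_links = {
        "/": ["/blog/", "/about/"],
        "/blog/": ["/blog/technical-seo/"],
        "/blog/technical-seo/": ["/"],
        "/orphan/": ["/"],
    }
    for page, depth in click_depths(site_links).items():
        print(depth, page)
```

Pages that never appear in the result, such as `/orphan/` in this sample, cannot be reached by following links from the homepage, which makes them hard for both users and crawlers to find.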

Diagnosing and Addressing Technical SEO Issues

By identifying and addressing technical SEO issues, you can maintain and improve your website’s search performance.

Popular tools for technical SEO audits

Several tools can help you identify and fix your site’s issues, including:

- Google Search Console shows you how Google crawls and indexes your site and flags potential security issues

- Screaming Frog crawls your site the way a search engine would and then pinpoints ways you can improve its performance

- Semrush checks for issues like broken links, crawlability problems, duplicate content, and slow page speed, and then recommends how to fix any problems it discovers

Gazing into the Future: Trends in Technical SEO

Technical SEO is evolving, especially due to Core Web Vitals, AI, and voice search.

Growing importance of Core Web Vitals

Google’s Core Web Vitals are among the signals its ranking systems use. They are a set of three metrics that measure key aspects of user experience on your site: Largest Contentful Paint (loading speed), Interaction to Next Paint (responsiveness), and Cumulative Layout Shift (visual stability). Google introduced them as part of its broader effort to improve the quality of web content and ensure that users have a smooth and enjoyable experience while browsing.
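Google publishes “good” and “poor” thresholds for each Core Web Vital: Largest Contentful Paint (LCP), Interaction to Next Paint (INP), and Cumulative Layout Shift (CLS). The sketch below rates measured values against those thresholds. The `rate_metric` helper and the sample measurements are assumptions, and the thresholds are worth rechecking against Google’s current documentation before you rely on them.

```python
# Upper bounds for "good" and "needs improvement"; anything above is "poor".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, in seconds
    "INP": (200, 500),   # Interaction to Next Paint, in milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless score
}


def rate_metric(name: str, value: float) -> str:
    """Classify one measurement as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[name]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    # Hypothetical measurements used only for illustration.
    measurements = {"LCP": 2.1, "INP": 350, "CLS": 0.3}
    for metric, value in measurements.items():
        print(metric, value, rate_metric(metric, value))
```

In this sample, LCP rates as good, INP as needs improvement, and CLS as poor, which points to layout stability as the first thing to fix.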

Technical SEO in the age of AI and voice search

Voice search, powered by AI virtual assistants like Siri, Alexa, and Google Assistant, is changing how people search for information online.

Voice searches are more conversational and often longer than text-based queries. Technical SEO must adapt to these natural language searches by optimizing for long-tail keywords and phrases.

Voice assistants often read featured snippets (position zero results) in response to voice queries.

Webmasters should aim to secure these coveted featured snippet spots. For many companies, this is relatively straightforward: The key is to provide concise and informative answers to common questions.

The Enduring Relevance of Technical SEO in a Dynamic Digital Landscape

It’s easy to take the technical elements of your site for granted, just as you might the electrical infrastructure of your city or town. But technical SEO powers your site. You can use it to ensure you appear higher in search engine rankings and provide rewarding experiences for your visitors.

If you want to improve your content strategy, check out our managed content solutions or speak with a content specialist about how we can support your content marketing efforts.